Algebraic Geometry and Statistical Learning Theory (Cambridge Monographs on Applied and Computational Mathematics, Series Number 25)

D**N

Excellent

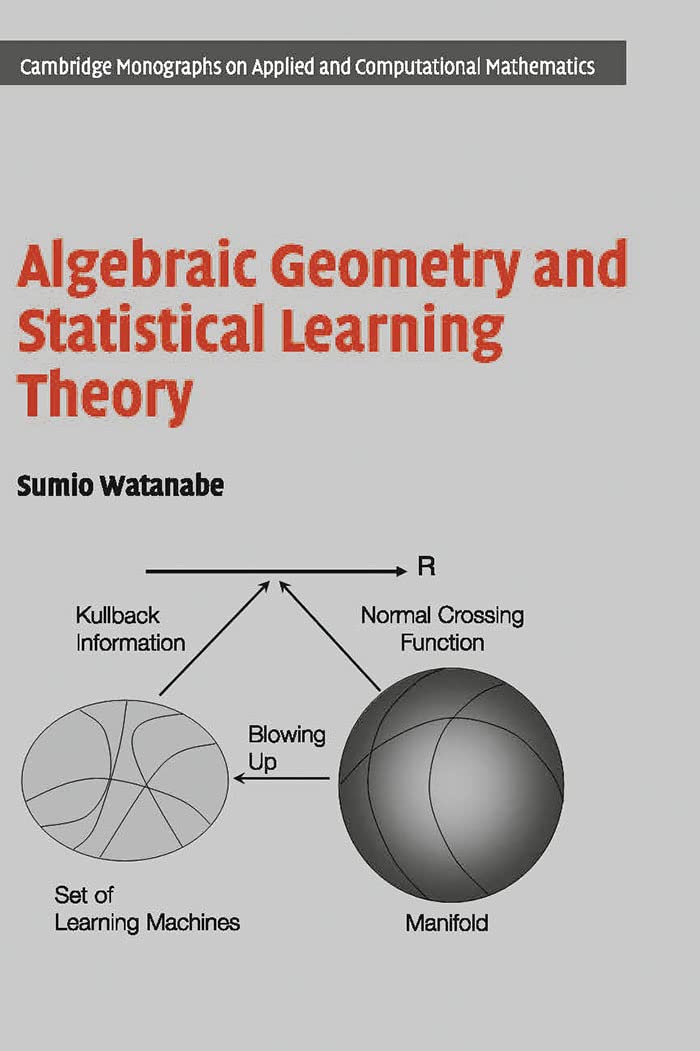

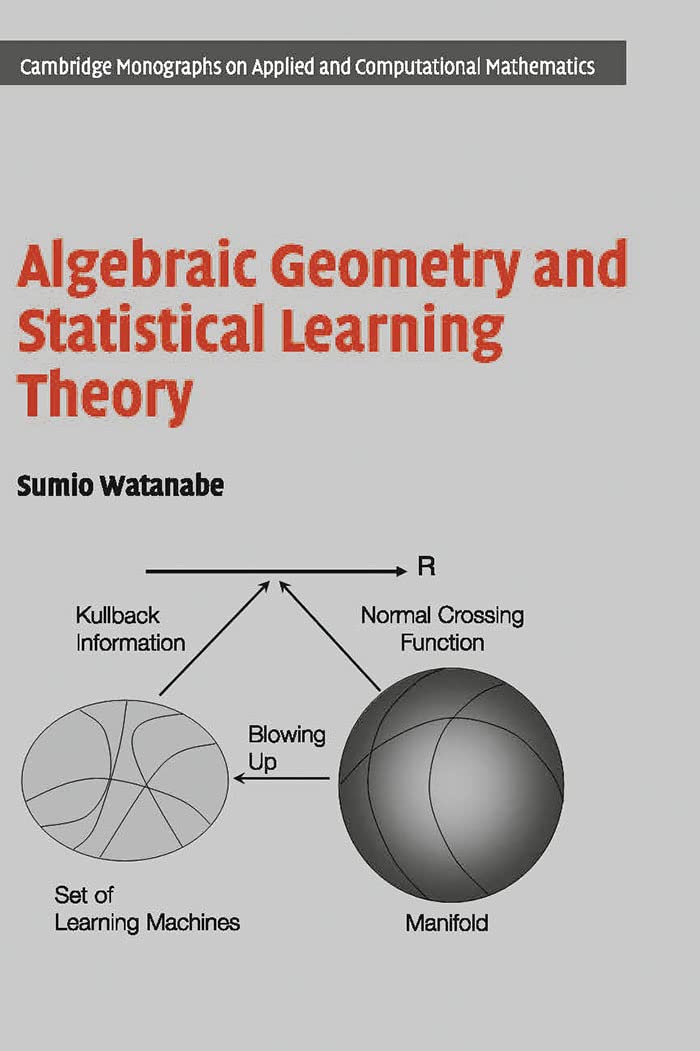

Statistical learning theory is now a well-established subject, and has found practical use in artificial intelligence as well as serving as a framework for studying computational learning theory. There are many fine books on the subject, but this one studies it from the standpoint of algebraic geometry, a field which decades ago was deemed too esoteric for use in the real world but is now embedded in myriad applications. More specifically, the author uses the resolution of singularities theorem from real algebraic geometry to study statistical learning theory when the parameter space is highly singular. The clarity of the book is outstanding, and it should be of great interest to anyone who wants to study statistical learning theory and is also interested in yet another application of algebraic geometry. Readers will need preparation in real and functional analysis, and some good background in algebraic geometry, but not necessarily at the level of modern approaches to the subject. In fact, the author does not use algebraic geometry over algebraically closed fields (only over the field of real numbers), and so readers do not need to approach this book with the heavy machinery that is characteristic of most contemporary texts and monographs on algebraic geometry. The author devotes some space in the book to a review of the needed algebraic geometry.

Also reviewed in the initial sections of the book are the concepts from statistical learning theory, including the very important method of comparing two probability density functions: the Kullback-Leibler distance (called relative entropy in the physics literature). The reader will need a good understanding of functional analysis to follow the discussion, being able to appreciate, for example, the difference between convergence in different norms on function space. From a theoretical standpoint, learning can be different in different norms, a fact that becomes readily apparent throughout the book (from a practical standpoint, however, it is difficult to distinguish between norms, due to the finiteness of all data sets). Of particular importance in the early discussion is the need for "singular" statistical learning theory, which, as the author shows, boils down to finding a mathematical formalism that can cope with learning problems where the Fisher information matrix is not positive definite (in this case there is no guarantee that unbiased estimators will be available). This is where (real) algebraic geometry comes in, for it allows the removal of the singularities in parameter space by recursively using "blow-up" (birational) maps. The author lists several examples of singular theories, such as hidden Markov models, Boltzmann machines, and Bayesian networks. The author also shows how to generalize some of the standard constructions in "ordinary" or "regular" statistical learning, such as the Akaike information criterion and the Bayes information criterion, to the case of singular theories. Some of the definitions he makes are somewhat different from what some readers are used to, such as the notion of stochastic complexity. In this book it is defined merely as the negative logarithm of the "evidence", whereas in information theory it is a measure of the code length of a sequence of data relative to a family of models. The methods for calculating the stochastic complexity in both cases are similar, of course.

In singular theories, one must deal with such things as the divergence of the maximum likelihood estimator and the failure of asymptotic normality. The author shows how to deal with these situations after the singularities are resolved, and he gives a convincing argument as to why his strategies are generic enough to cover situations where the set of singular parameters, i.e. the set where the Fisher information matrix is degenerate, has measure zero. In this case, he correctly points out that one still needs to know if the true parameter is contained in the singular set, and this entails dealing with "non-generic" situations using hypothesis testing, etc.

Examples of singular learning machines are given towards the end of the book, one of these being a hidden Markov model, while another deals with a multilayer perceptron. The latter example is very important since slow learning in multilayer perceptrons is widely encountered in practice (and depends largely on the training samples). The author shows how this is related to the singularities in the parameter space from which the learning is sampled, even when the true distribution is outside of the parametric model, where the collection of parameters is finite. This example lends credence to the motto that "singularities affect learning", and the author goes on to show to what extent this is a "universal" phenomenon. By this he means that having only a "small" number of training samples will bring out the complexity of the singular parameter space; increasing the number of training samples brings out the simplicity of the singular parameter space. He concludes from this that the singularities make the learning curve smaller than that of any nonsingular learning machine. Most interestingly, he speculates that "brain-like systems utilize the effect of singularities in the real world."

A**K

Good but requires a second edition

This book gives - or could give, as I will soon explain - a very approachable dive into an important theoretical topic that both theoreticians and practitioners in the field of machine learning or data science should learn. However, the author's transitions between definitions/statements/remarks/theorems are not so straightforward or clear. It is seldom "easy" - as the author puts it - to see why an equation holds, and yet at other times the author wastes space on truly easy-to-prove theorems. Add to this some clumsy English - easily fixed by closer attention to editing - and some typographical errors in a few formulas, and the book starts to become slightly frustrating to go through because it reads more like a manuscript/paper, where you need to verify every step rather than take the author at his word. And so, you are left flipping back and forth many times, and if you are not familiar with - i.e. comfortable with - the topics introduced in the first 3 chapters, then I suspect that this is a very difficult read.

That being said, it is a damn shame that such small things should hold back such great content. As such, the book NEEDS a second edition, especially since we do not have enough books covering such topics. Nevertheless, I think that a reader with a strong background in measure theory, differential geometry, and abstract algebra, and the proper motivation, could get through this book.

T**N

Must-read but reeeally hard

Today program development is guided by best practices, traditions, and downright doctrinal beliefs. Machine learning in particular has always been a set of magical techniques, especially when applied to linguistic problems. Prof. Watanabe is paving the way to a world where it is an actual science. This is one of the most important books I know for the future of computer science engineering. The problem is that reading this requires an übernerd-level mathematics background *and* mindset (do not expect much pedagogy). So chances are, if you are of the mathematician breed you will love it; if your background is computer science engineering, it will make you cry.

B**C

Highly recommended

Love this book! Can’t wait to continue to dig in.

P**N

interesting but needs more explanation

I think I have more than enough background in algebraic geometry and other math/physics to understand the content. But in the first chapter the author introduces many examples from statistics, along with tedious calculations, without giving me any motivation for them. Boring!
